# Ollama AI Translate (Offline)

Ollama official website address

Preface: This translation source uses offline AI translation. You need to download the Ollama software and an offline large AI model.

Since Ollama does not yet provide a graphical interface, it can only be operated through the console/terminal.

If enough users ask for a graphical interface, we will consider developing one. Feedback is welcome.

AI model information: the official website may change over time, so the list below is for reference only. Please refer to the official documentation for details.

AI Model - Official Introduction Document

| Model name | Parameters | Model size | Command |
|---|---|---|---|
| Llama 3 | 8B | 4.7GB | `ollama run llama3` |
| Llama 3 | 70B | 40GB | `ollama run llama3:70b` |
| Phi-3 | 3.8B | 2.3GB | `ollama run phi3` |
| Mistral | 7B | 4.1GB | `ollama run mistral` |
| Neural Chat | 7B | 4.1GB | `ollama run neural-chat` |
| Starling | 7B | 4.1GB | `ollama run starling-lm` |
| Code Llama | 7B | 3.8GB | `ollama run codellama` |
| Llama 2 Uncensored | 7B | 3.8GB | `ollama run llama2-uncensored` |
| LLaVA | 7B | 4.5GB | `ollama run llava` |
| Gemma | 2B | 1.4GB | `ollama run gemma:2b` |
| Gemma | 7B | 4.8GB | `ollama run gemma:7b` |
| Solar | 10.7B | 6.1GB | `ollama run solar` |

Prerequisites for use

Terminology: RAM (Random Access Memory) is the computer's main memory, usually installed as memory modules (sticks).

According to the official website, the AI models have the following memory requirements:

7B models require at least 8 GB of available RAM to run

13B models require at least 16 GB of available RAM to run

33B models require at least 32 GB of available RAM to run
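The RAM guidance above can be turned into a quick check. The following is a minimal sketch in Python that assumes only the three thresholds listed above; the function name `largest_supported_class` is hypothetical and is not part of Ollama.

```python
# Minimum available RAM (in GB) per model class, taken from the list above.
RAM_REQUIREMENTS_GB = {"7B": 8, "13B": 16, "33B": 32}


def largest_supported_class(available_ram_gb):
    """Return the largest model class whose RAM requirement is met, or None."""
    supported = [
        (required, model_class)
        for model_class, required in RAM_REQUIREMENTS_GB.items()
        if available_ram_gb >= required
    ]
    if not supported:
        return None
    # max() compares the required-RAM value first, so the largest class wins.
    return max(supported)[1]


if __name__ == "__main__":
    print(largest_supported_class(24))  # prints 13B
```

These thresholds are minimums for a model to run at all, so a smaller model may still be the better choice when speed matters.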

We tested the following three models on a computer with 24 GB of RAM: Gemma 2B, Llama 3 8B, and Llama 2 Uncensored 7B.

Of these, Gemma 2B translates fastest, and its translation quality is good in most cases, which makes it a reasonable choice when you do not need highly accurate translations. The other models give higher translation quality but run more slowly. Choose based on your own testing.

1. Download and install Ollama

Ollama official website: https://ollama.com/

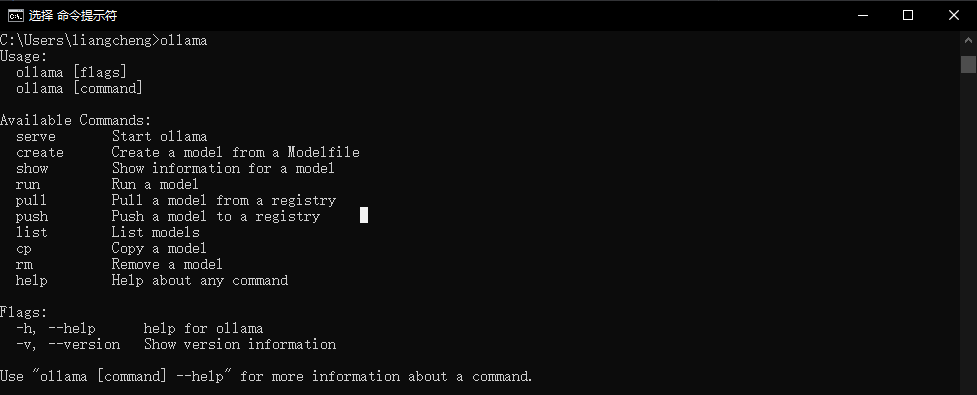

After the installation is complete, open the computer console/terminal and enter: ollama

If the following interface appears, the installation is successful.

2. Download the AI large model through Ollama

Open the console/terminal, then copy the command for the model you want from the model list above and run it.

Other Ollama commands

View the list of currently downloaded models:

`ollama list`

Download a model (this command can also be used to update a local model; only the differences are pulled):

`ollama pull xxx`

Delete a model:

`ollama rm xxx`
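The commands above can also be scripted. The following is a minimal sketch in Python, assuming the `ollama` executable is installed and on the PATH. The helper names `ollama_command` and `run_ollama` are hypothetical, and only the `list`, `pull`, and `rm` subcommands shown above are used.

```python
import subprocess

# Subcommands taken from the command list above; "list" takes no model name.
SUPPORTED_ACTIONS = {"list": False, "pull": True, "rm": True}


def ollama_command(action, model=None):
    """Build the argument list for one of the Ollama commands shown above."""
    if action not in SUPPORTED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    needs_model = SUPPORTED_ACTIONS[action]
    if needs_model and not model:
        raise ValueError(f"{action!r} needs a model name, e.g. 'gemma:2b'")
    return ["ollama", action] + ([model] if needs_model else [])


def run_ollama(action, model=None):
    """Run the command and return its standard output as text.

    Raises FileNotFoundError if the ollama executable is not installed,
    and subprocess.CalledProcessError if the command exits with an error.
    """
    result = subprocess.run(
        ollama_command(action, model),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

For example, `run_ollama("list")` runs `ollama list` and returns its output as a string, and `run_ollama("pull", "gemma:2b")` runs `ollama pull gemma:2b`.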